Recently, I’ve discovered something that has completely changed how I think about AI-assisted development: the most productive AI coding experience isn’t necessarily the one where you’re constantly interacting with an AI assistant. It’s the one where AI works autonomously while you focus on other tasks.

What Are Autonomous Coding Agents?

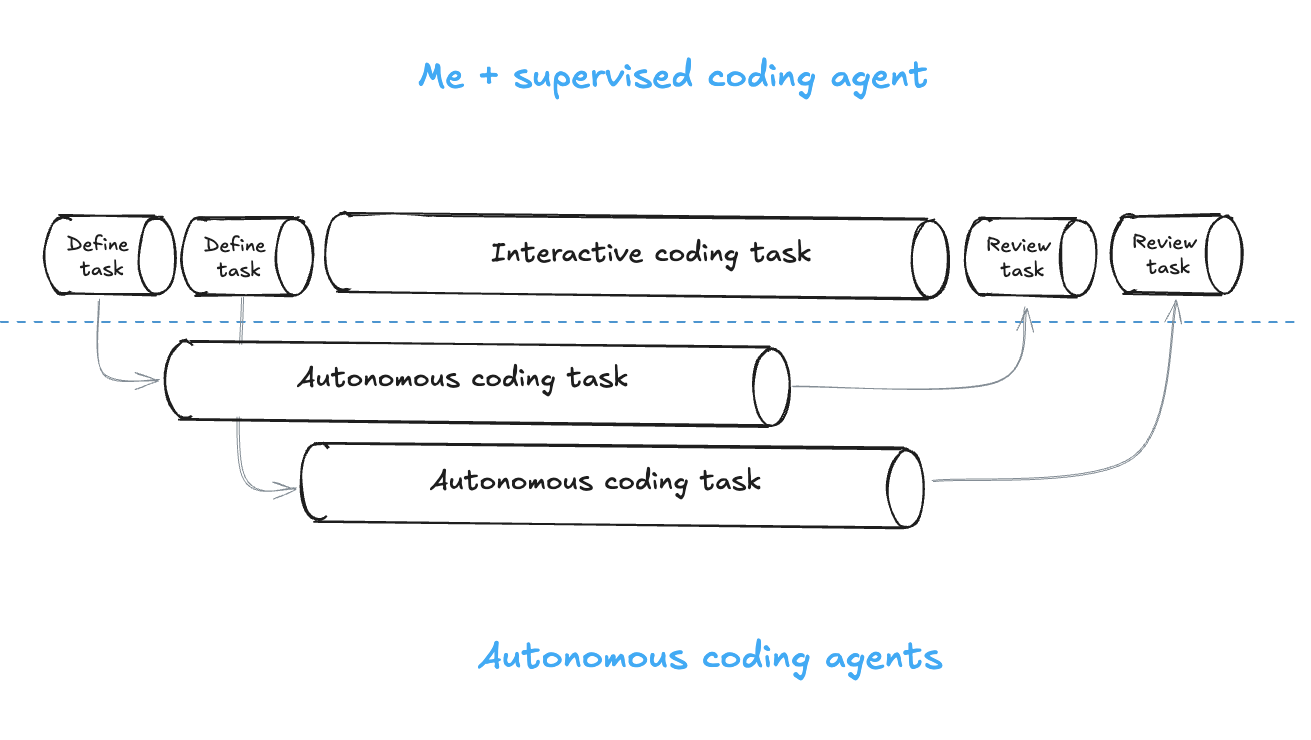

Until recently, most of my AI-assisted code was written by a supervised coding agent, Cursor. Autonomous coding agents are very different:

- They work in the background, unsupervised.

- They operate in the cloud rather than locally in your IDE or terminal.

The idea is that you give the agent a clear task and leave it alone to complete it. When it finishes, it creates a PR for you to review. You can even give the agent a task from your phone!

I’ve used Devin, OpenAI Codex, Google Jules, and, more recently, Cursor background agents.

Initial Skepticism

AI tools like Cursor and GitHub Copilot revolutionized my coding speed. I was amazed, firstly, by the super-smart autocomplete and then again when supervised agents were able to make robust code changes that I requested. I especially love asking the agent to make UI changes and, seconds later, seeing the changes in real-time, allowing for quick iterations. I felt so much faster than I did a year ago because of the ability to quickly translate my thoughts into working code.

Devin was the first autonomous coding agent I used, and I was skeptical. My initial thought was: “Why would I want this? It’s much slower and far more expensive than Cursor.” This was back in March 2025, and the pricing has changed quite a bit since then!

I initially dismissed Devin because I was thinking about it incorrectly. Firstly, I assumed I needed to sit there and hand-hold it, essentially making it a slow, interactive tool. I then tried giving Devin a couple of large, meaty tasks—my thinking was that it needed to do something significant if I was going to leave it for an hour or so. Obviously, this approach didn’t work out well.

The breakthrough came when I realised that background agents aren’t about speeding up your current workflow—they’re about parallel processing.

How Autonomous Coding Agents Increase Productivity

The key insight for me was shifting from thinking about speed to thinking about throughput. Yes, watching Devin implement a feature takes longer than doing it myself with Cursor. But here’s what I was missing: while Devin works on Task A, I can be productive on Task B using the Cursor supervised agent. Even if the tasks given to an autonomous agent are simple, small tasks, parallelization can significantly increase productivity.

It’s not about individual task speed—it’s about getting multiple things done simultaneously.

This realization was a powerful productivity multiplier.
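To put rough numbers on the speed-versus-throughput point, here’s a back-of-the-envelope model in Python. It’s only a sketch: the function name, the parameters, and all the numbers are made up for illustration, and it assumes every delegated task finishes within the day and is accepted without rework.

```python
def completed_tasks(day_minutes, own_task_minutes, delegated, overhead_minutes):
    """Estimate tasks finished in a day when some are delegated to agents.

    day_minutes:       my total attention for the day
    own_task_minutes:  how long one task takes me with a supervised agent
    delegated:         number of tasks handed to autonomous agents
    overhead_minutes:  my time per delegated task (prompting + reviewing)
    """
    attention_left = day_minutes - delegated * overhead_minutes
    if attention_left < 0:
        raise ValueError("not enough time to prompt and review that many tasks")
    # Delegated tasks complete in the background; the rest of my time
    # goes to tasks I work on myself.
    return delegated + attention_left / own_task_minutes


# Illustrative numbers only: an 8-hour day, 60-minute tasks,
# 15 minutes of prompt/review overhead per delegated task.
print(completed_tasks(480, 60, delegated=0, overhead_minutes=15))  # 8.0
print(completed_tasks(480, 60, delegated=4, overhead_minutes=15))  # 11.0
```

In this toy model, each delegated task is slower end to end, but it only costs me 15 minutes of attention, so the day’s total goes up.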

What Types of Tasks to Give to Autonomous Coding Agents

As you can see from the diagram above, I still need to do some work on tasks I give to autonomous coding agents:

- I spend time constructing a clear, thorough prompt for the task.

- I spend time reviewing and testing the code.

To gain a good productivity boost, the agent needs to complete the task in one shot. Time spent tweaking the generated code or re-prompting hurts productivity.
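One way to see why the one-shot requirement matters is to estimate the expected saving per delegated task. The sketch below is hypothetical: the parameter names and numbers are mine, and it treats rework as a single fixed cost whenever the first attempt isn’t good enough.

```python
def expected_minutes_saved(own_minutes, prompt_minutes, review_minutes,
                           one_shot_rate, rework_minutes):
    """Expected minutes of my time saved by delegating one task.

    one_shot_rate:  fraction of tasks the agent completes first time (0.0 to 1.0)
    rework_minutes: my extra time (tweaking or re-prompting) when it doesn't
    """
    expected_cost = (prompt_minutes + review_minutes
                     + (1 - one_shot_rate) * rework_minutes)
    return own_minutes - expected_cost


# Illustrative numbers only: a 30-minute task, 5 minutes to prompt,
# 10 minutes to review, 40 minutes of rework on a miss.
print(expected_minutes_saved(30, 5, 10, one_shot_rate=1.0, rework_minutes=40))  # 15.0
print(expected_minutes_saved(30, 5, 10, one_shot_rate=0.5, rework_minutes=40))  # -5.0
```

With these made-up numbers, a task that lands first time saves 15 minutes, while a 50% miss rate turns delegation into a net loss.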

The tasks need to be small and simple. They are usually straightforward changes I could make if I had the time. Usually, I’ll have a decent idea of where the code changes need to be made. So, a lot of the time, the tasks are simple features or fixes. Small code refactoring and documentation are also good autonomous tasks.

An interesting side effect of using autonomous coding agents shows up when you find a bug while working in a different area of an app. Instead of adding it to the backlog, you just ask an autonomous coding agent to fix it. No more endless backlogs!

What Not to Give Autonomous Coding Agents

As mentioned before, I avoid complex tasks that can’t be done in one shot. I keep tasks that need research or in-depth thinking for myself, asking an AI tool questions where needed.

Personally, being frontend-focused, when building new screens, I like to start the UI work myself with a supervised agent. I like to quickly iterate until the screen looks and feels right. Once the basics of the screen are in place, I’m happy to hand off to an autonomous agent to do minor UI work.

Maximising Throughput

The autonomous coding agents work in an environment in the cloud. For maximum impact, the environment should be set up like your local environment. This includes not only the code but also any tools, such as local databases and services. For complex apps, this can take time, but it’s worth it. The environment also persists, so there is no need to repeat the setup before each task.

The reason for the setup is so that the autonomous coding agent can execute a build, perform linting checks, and run tests—it can even run the app. Just like a human developer, the autonomous coding agent will want to do these operations to validate the changes it makes. If something fails, it’ll dig into the problem and try to resolve it, adjusting the code. So, linting and a solid test suite help the autonomous coding agent be successful.
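It can help to give the agent a single entry point that runs the same checks you would run locally. The script below is a minimal sketch of that idea, not how any of these agents work internally. The `run_checks` helper and the `npm` commands in `EXAMPLE_CHECKS` are assumptions for a typical frontend project, so substitute your own build, lint, and test commands.

```python
import subprocess
import sys


def run_checks(checks):
    """Run each (name, argv) check in order, stopping at the first failure.

    Returns a list of (name, passed) tuples for the checks that were run.
    """
    results = []
    for name, argv in checks:
        completed = subprocess.run(argv, capture_output=True, text=True)
        passed = completed.returncode == 0
        results.append((name, passed))
        if not passed:
            # Surface the failing output so whoever runs this (human or
            # agent) has something concrete to dig into.
            print(f"{name} failed:\n{completed.stdout}{completed.stderr}",
                  file=sys.stderr)
            break
    return results


# Hypothetical commands; replace with whatever your project actually uses.
EXAMPLE_CHECKS = [
    ("build", ["npm", "run", "build"]),
    ("lint", ["npm", "run", "lint"]),
    ("test", ["npm", "test"]),
]
```

Calling `run_checks(EXAMPLE_CHECKS)` in the cloud environment is also a quick way to confirm the setup matches your local one before you hand over the first task.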

Another way to maximise throughput is to avoid parallel tasks in the same area of code; otherwise, you end up spending time dealing with merge conflicts. I usually mentally assign agents to areas of code and give them tasks in those areas, just like a human team would organise themselves.
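I do this assignment in my head, but the same check can be sketched in a few lines of Python. Everything here is illustrative: the `conflicting_agents` helper, the agent names, and the directory paths are hypothetical, and the check only looks at directories, not at individual files or shared dependencies.

```python
from itertools import combinations
from pathlib import PurePosixPath


def _overlaps(a, b):
    """True if two directories are the same or one contains the other."""
    pa, pb = PurePosixPath(a), PurePosixPath(b)
    return pa == pb or pa in pb.parents or pb in pa.parents


def conflicting_agents(assignments):
    """Return pairs of agents whose assigned directories overlap.

    assignments maps an agent name to the list of directories it may touch.
    """
    conflicts = []
    for (agent_a, dirs_a), (agent_b, dirs_b) in combinations(assignments.items(), 2):
        if any(_overlaps(a, b) for a in dirs_a for b in dirs_b):
            conflicts.append((agent_a, agent_b))
    return conflicts


# Hypothetical assignment: two agents end up in the checkout area.
assignments = {
    "devin": ["src/checkout"],
    "codex": ["src/profile"],
    "cursor": ["src/checkout/cart"],
}
print(conflicting_agents(assignments))  # [('devin', 'cursor')]
```

A flagged pair is a hint to run those two tasks one after the other rather than in parallel.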

When I set the agent off, I usually watch it for a minute to see if it has any questions or is confident enough to do the task. I love Devin’s confidence rating—when I see that green circle, I know it’s safe to leave it to complete the task.

While the autonomous coding agent is working away, I do my best to keep it out of my mind and focus on my task. I want to avoid expensive context switching.

When I’ve finished my own task, I review and test the autonomous coding agent’s changes. Usually, I end up creating and merging the PR. Occasionally, I make some minor adjustments, and I rarely abandon a PR. Adjustments and abandoned PRs obviously reduce throughput.

I find that I typically run two or three autonomous coding agents in parallel. This leaves me time to carry out a complex task or some research myself. You can probably run more tasks in parallel if all of them are straightforward and need no research, but you’ll then spend all your time defining, reviewing, and testing tasks.

Why Aren’t More Developers Using Autonomous Coding Agents?

Devin’s been around for a while, but it was expensive. I was lucky to use it at work. Devin’s also built by Cognition, a startup rather than a household name. I’m not sure about their marketing either: to me, the tool comes across as a developer replacement, which obviously won’t appeal to many developers!

OpenAI Codex and Google Jules are newer, and Cursor’s background agent is newer still. So, I don’t think autonomous coding agents have had time to really catch on yet.

Lastly, developers may misunderstand the value of autonomous agents like I initially did, missing the key point about getting work done in parallel.

Which One Should I Use?

I’ve had a lot of success with Codex and Devin. Cursor’s background agent seems good as well, but I’ve not used it as much because it hasn’t been around long.

The only one that didn’t work well was Google Jules, but that was a couple of months ago, and I’ve not tried it recently. You can’t argue with free, though!

Speaking of pricing, they’re all currently pretty affordable. At the time of writing this post:

- If you’re on ChatGPT Plus ($20 per month), you get access to Codex.

- Devin now starts at $20, and you can get $75 of credits free to celebrate the Windsurf acquisition.

- Cursor’s background agent is included in Cursor Pro ($20 per month). You’ll need to keep within the usage allowance, though.

- Google Jules is free as it’s still in beta.

I’d encourage you to give some of these a try and see if they make you more productive.